algorithms.clustering.imm¶

Module: algorithms.clustering.imm¶

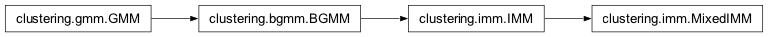

Inheritance diagram for nipy.algorithms.clustering.imm:

Infinite mixture model: a generalization of Bayesian mixture models with an unspecified number of classes.

Classes¶

IMM¶

- class nipy.algorithms.clustering.imm.IMM(alpha=0.5, dim=1)¶

Bases: BGMM

The class implements the Infinite Gaussian Mixture Model, also known as the Dirichlet Process Mixture Model. It is a generalization of Bayesian Gaussian Mixture Models to an unknown number of classes.

- __init__(alpha=0.5, dim=1)¶

- Parameters:

- alpha: float, optional,

the parameter for cluster creation

- dim: int, optional,

the dimension of the data

- Note: use the function set_priors() to set adapted priors
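To give some intuition for what a cluster-creation parameter does in a Dirichlet process mixture, here is a minimal sketch of a Chinese restaurant process. It assumes that alpha plays the usual role of a concentration parameter; the function name `crp_assignments` is hypothetical and the code does not call nipy or reproduce its sampler.

```python
import random

def crp_assignments(n_samples, alpha, seed=0):
    """Draw cluster labels from a Chinese restaurant process (illustrative only)."""
    rng = random.Random(seed)
    labels = []
    counts = []  # counts[k] = number of samples already assigned to cluster k
    for _ in range(n_samples):
        # An existing cluster k is chosen with weight counts[k];
        # a brand-new cluster is opened with weight alpha.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)  # open a new cluster
        else:
            counts[k] += 1
        labels.append(k)
    return labels, counts

# Larger alpha values tend to produce more clusters for the same sample size.
_, counts_small = crp_assignments(200, alpha=0.5)
_, counts_large = crp_assignments(200, alpha=20.0)
print(len(counts_small), len(counts_large))
```

Under this assumption, the default alpha=0.5 favours a small number of clusters.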

- average_log_like(x, tiny=1e-15)¶

returns the averaged log-likelihood of the model for the dataset x

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- tiny = 1.e-15: a small constant to avoid numerical singularities
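The role of tiny can be illustrated with a small sketch. It assumes the constant is added to each per-sample mixture likelihood before taking the logarithm; whether nipy adds or clips is not stated here, so treat this as one plausible reading. Plain lists stand in for arrays.

```python
import math

def average_log_like(mixture_like, tiny=1e-15):
    """Mean of log(likelihood + tiny) over samples (illustrative only)."""
    return sum(math.log(v + tiny) for v in mixture_like) / len(mixture_like)

# The last sample has zero density under the model; without tiny,
# math.log(0.0) would raise a ValueError.
like = [0.2, 0.05, 0.0]
print(average_log_like(like))
```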

- bayes_factor(x, z, nperm=0, verbose=0)¶

Evaluate the Bayes Factor of the current model using Chib’s method

- Parameters:

- x: array of shape (nb_samples,dim)

the data from which bic is computed

- z: array of shape (nb_samples), type = np.int_

the corresponding classification

- nperm=0: int

the number of permutations to sample in order to model the label switching issue in the computation of the Bayes Factor. By default, exhaustive permutations are used.

- verbose=0: verbosity mode

- Returns:

- bf (float) the computed evidence (Bayes factor)

Notes

See: Siddhartha Chib, "Marginal Likelihood from the Gibbs Output", Journal of the American Statistical Association, Vol. 90, 1995.
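The nperm parameter can be pictured with the sketch below, which builds either all permutations of the k labels (nperm equal to 0) or a random sample of them. The helper names `label_permutations` and `relabel` are hypothetical, and the way nipy actually draws its permutations may differ.

```python
import itertools
import random

def label_permutations(k, nperm=0, seed=0):
    """All permutations of range(k) if nperm == 0, else nperm random ones."""
    if nperm == 0:
        return list(itertools.permutations(range(k)))
    rng = random.Random(seed)
    perms = []
    for _ in range(nperm):
        p = list(range(k))
        rng.shuffle(p)
        perms.append(tuple(p))
    return perms

def relabel(z, perm):
    """Apply a label permutation to an allocation vector."""
    return [perm[label] for label in z]

print(len(label_permutations(3)))          # 3! = 6 exhaustive permutations
print(relabel([0, 1, 1, 2], (2, 0, 1)))    # [2, 0, 0, 1]
```

Exhaustive enumeration grows as k!, which is why sampling a fixed number of permutations becomes attractive for larger k.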

- bic(like, tiny=1e-15)¶

Computation of bic approximation of evidence

- Parameters:

- like, array of shape (n_samples, self.k)

component-wise likelihood

- tiny=1.e-15, a small constant to avoid numerical singularities

- Returns:

- the bic value, float
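As a rough illustration of a BIC-style approximation, the sketch below sums the log of the per-sample mixture likelihood and subtracts a penalty proportional to the number of free parameters. The parameter count (full covariance), the sign convention, and the explicit `dim` argument are assumptions made for this example; they are not taken from the nipy implementation.

```python
import math

def bic_sketch(like, dim, tiny=1e-15):
    """BIC-style evidence approximation from component-wise likelihoods."""
    n_samples = len(like)
    k = len(like[0])
    # Mixture log-likelihood: sum the weighted components for each sample.
    log_like = sum(math.log(sum(row) + tiny) for row in like)
    # Assumed free parameters: k means, k symmetric precision matrices,
    # and k - 1 independent weights.
    n_params = k * (dim + dim * (dim + 1) // 2) + (k - 1)
    return log_like - 0.5 * n_params * math.log(n_samples)

like = [[0.5, 0.5], [0.5, 0.5]]
print(bic_sketch(like, dim=1))
```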

- check()¶

Checking the shape of different matrices involved in the model

- check_x(x)¶

essentially check that x.shape[1]==self.dim

x is returned, possibly reshaped

- conditional_posterior_proba(x, z, perm=None)¶

Compute the probability of the current parameters of self given x and z

- Parameters:

- x: array of shape (nb_samples, dim),

the data from which bic is computed

- z: array of shape (nb_samples), type = np.int_,

the corresponding classification

- perm: array of shape(nperm, self.k), type = np.int_, optional

all permutations of z under which things will be recomputed. By default, no permutation is performed.

- cross_validated_update(x, z, plike, kfold=10)¶

This is a step in the sampling procedure that uses internal cross-validation

- Parameters:

- x: array of shape(n_samples, dim),

the input data

- z: array of shape(n_samples),

the associated membership variables

- plike: array of shape(n_samples),

the likelihood under the prior

- kfold: int, or array of shape(n_samples), optional,

folds in the cross-validation loop

- Returns:

- like: array of shape(n_samples),

the (cross-validated) likelihood of the data

- estimate(x, niter=100, delta=0.0001, verbose=0)¶

Estimation of the model given a dataset x

- Parameters:

- x array of shape (n_samples,dim)

the data from which the model is estimated

- niter=100: maximal number of iterations in the estimation process

- delta = 1.e-4: increment of data likelihood at which

convergence is declared

- verbose=0: verbosity mode

- Returns:

- bic: an asymptotic approximation of model evidence

- evidence(x, z, nperm=0, verbose=0)¶

See bayes_factor(self, x, z, nperm=0, verbose=0)

- guess_priors(x, nocheck=0)¶

Set the priors so that they are weakly informative, following Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x, array of shape (nb_samples,self.dim)

the data used in the estimation process

- nocheck: boolean, optional,

if nocheck==True, check is skipped

- guess_regularizing(x, bcheck=1)¶

Set the regularizing priors as weakly informative, according to Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x array of shape (n_samples,dim)

the data used in the estimation process

- initialize(x)¶

initialize z using a k-means algorithm, then update the parameters

- Parameters:

- x: array of shape (nb_samples,self.dim)

the data used in the estimation process

- initialize_and_estimate(x, z=None, niter=100, delta=0.0001, ninit=1, verbose=0)¶

Estimation of self given x

- Parameters:

- x array of shape (n_samples,dim)

the data from which the model is estimated

- z = None: array of shape (n_samples)

a prior labelling of the data to initialize the computation

- niter=100: maximal number of iterations in the estimation process

- delta = 1.e-4: increment of data likelihood at which

convergence is declared

- ninit=1: number of initializations performed

to reach a good solution

- verbose=0: verbosity mode

- Returns:

- the best model is returned

- likelihood(x, plike=None)¶

return the likelihood of the model for the data x. The values are weighted by the component weights.

- Parameters:

- x: array of shape (n_samples, self.dim),

the data used in the estimation process

- plike: array of shape (n_samples), optional,

the density of each point under the prior

- Returns:

- like: array of shape (nbitem, self.k),

component-wise likelihood

- likelihood_under_the_prior(x)¶

Computes the likelihood of x under the prior

- Parameters:

- x, array of shape (self.n_samples,self.dim)

- Returns:

- w, the likelihood of x under the prior model (unweighted)

- map_label(x, like=None)¶

return the MAP labelling of x

- Parameters:

- x array of shape (n_samples,dim)

the data under study

- like=None array of shape(n_samples,self.k)

component-wise likelihood. If like==None, it is recomputed.

- Returns:

- z: array of shape(n_samples): the resulting MAP labelling

of the rows of x
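A MAP labelling in this setting amounts to picking, for each row of the component-wise likelihood, the component with the largest value. The sketch below shows that step on plain lists; the name `map_label_sketch` is hypothetical and the recomputation of like from x is left out.

```python
def map_label_sketch(like):
    """Index of the largest component-wise likelihood in each row."""
    return [max(range(len(row)), key=row.__getitem__) for row in like]

like = [[0.1, 0.7, 0.2],
        [0.6, 0.3, 0.1]]
print(map_label_sketch(like))  # [1, 0]
```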

- mixture_likelihood(x)¶

Returns the likelihood of the mixture for x

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- plugin(means, precisions, weights)¶

Set manually the weights, means and precision of the model

- Parameters:

- means: array of shape (self.k,self.dim)

- precisions: array of shape (self.k,self.dim,self.dim)

or (self.k, self.dim)

- weights: array of shape (self.k)

- pop(z)¶

compute the population, i.e. the statistics of allocation

- Parameters:

- z array of shape (nb_samples), type = np.int_

the allocation variable

- Returns:

- hist: array of shape (self.k), count variable

- probability_under_prior()¶

Compute the probability of the current parameters of self given the priors

- reduce(z)¶

Reduce the assignments by removing empty clusters and update self.k

- Parameters:

- z: array of shape(n),

a vector of membership variables changed in place

- Returns:

- z: the remapped values
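The effect of removing empty clusters can be sketched as a relabelling to consecutive integers, followed by a population count. The function names are hypothetical; the sketch returns new lists rather than modifying z in place, and the ordering of the remapped labels is an assumption.

```python
def reduce_labels(z):
    """Remap labels to 0..k-1, dropping unused values; return (new_z, k)."""
    used = sorted(set(z))
    remap = {old: new for new, old in enumerate(used)}
    return [remap[label] for label in z], len(used)

def population(z, k):
    """Number of samples allocated to each of the k clusters."""
    hist = [0] * k
    for label in z:
        hist[label] += 1
    return hist

z, k = reduce_labels([0, 3, 3, 5, 0])
print(z, k)              # [0, 1, 1, 2, 0] 3
print(population(z, k))  # [2, 2, 1]
```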

- sample(x, niter=1, sampling_points=None, init=False, kfold=None, verbose=0)¶

sample the indicator and parameters

- Parameters:

- x: array of shape (n_samples, self.dim)

the data used in the estimation process

- niter: int,

the number of iterations to perform

- sampling_points: array of shape(nbpoints, self.dim), optional

points where the likelihood will be sampled. This defaults to x.

- kfold: int or array, optional,

parameter of cross-validation control. By default, no cross-validation is used: the procedure is faster but less accurate.

- verbose=0: verbosity mode

- Returns:

- likelihood: array of shape(nbpoints)

total likelihood of the model

- sample_and_average(x, niter=1, verbose=0)¶

sample the indicator and parameters. The average values for weights, means and precisions are returned.

- Parameters:

- x = array of shape (nb_samples,dim)

the data from which bic is computed

- niter=1: number of iterations

- Returns:

- weights: array of shape (self.k)

- means: array of shape (self.k,self.dim)

- precisions: array of shape (self.k,self.dim,self.dim)

or (self.k, self.dim). These are the average parameters across samplings.

Notes

All this makes sense only if no label switching has occurred, so this is wrong in general (asymptotically).

fix: implement a permutation procedure for components identification
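A toy example makes the label switching caveat concrete. Below, two draws describe the same 1D two-component model, but in the second case the component labels are swapped between draws, so a naive component-wise average collapses both means to zero. The data and the helper `average_means` are made up for illustration.

```python
def average_means(samples):
    """Component-wise average of a list of per-draw mean vectors."""
    n = len(samples)
    return [sum(s[j] for s in samples) / n for j in range(len(samples[0]))]

# Two draws with consistent labelling of the components.
consistent = [[-2.0, 2.0], [-2.0, 2.0]]
# Same model, but the labels are swapped in the second draw.
switched = [[-2.0, 2.0], [2.0, -2.0]]

print(average_means(consistent))  # [-2.0, 2.0]
print(average_means(switched))    # [0.0, 0.0]
```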

- sample_indicator(like)¶

Sample the indicator from the likelihood

- Parameters:

- like: array of shape (nbitem,self.k)

component-wise likelihood

- Returns:

- z: array of shape(nbitem): a draw of the membership variable

Notes

The behaviour is different from standard bgmm in that z can take arbitrary values
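Drawing a membership variable from a component-wise likelihood can be sketched as one categorical draw per row, with probabilities proportional to the row entries. This is a generic illustration on plain lists with a hypothetical function name; it does not reproduce how the IMM handles labels outside the current set of components.

```python
import random

def sample_indicator_sketch(like, seed=0):
    """Draw one label per row, with probability proportional to the row entries."""
    rng = random.Random(seed)
    z = []
    for row in like:
        # random.choices normalizes the weights internally.
        z.append(rng.choices(range(len(row)), weights=row)[0])
    return z

like = [[1.0, 0.0],   # always component 0
        [0.0, 1.0],   # always component 1
        [0.5, 0.5]]   # either component with equal probability
print(sample_indicator_sketch(like))
```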

- set_constant_densities(prior_dens=None)¶

Set the null and prior densities as constant (assuming a compact domain)

- Parameters:

- prior_dens: float, optional

constant for the prior density

- set_priors(x)¶

Set the priors so that they are weakly informative, following Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x, array of shape (n_samples,self.dim)

the data used in the estimation process

- show(x, gd, density=None, axes=None)¶

Function to plot a GMM, still in progress. Currently, works only in 1D and 2D.

- Parameters:

- x: array of shape(n_samples, dim)

the data under study

- gd: GridDescriptor instance

- density: array of shape(prod(gd.n_bins))

density of the model on the discrete grid implied by gd. By default, this is recomputed.

- show_components(x, gd, density=None, mpaxes=None)¶

Function to plot a GMM – Currently, works only in 1D

- Parameters:

- x: array of shape(n_samples, dim)

the data under study

- gd: GridDescriptor instance

- density: array of shape(prod(gd.n_bins))

density of the model on the discrete grid implied by gd. By default, this is recomputed.

- mpaxes: axes handle to make the figure, optional,

if None, a new figure is created

- simple_update(x, z, plike)¶

This is a step in the sampling procedure that uses internal cross-validation

- Parameters:

- x: array of shape(n_samples, dim),

the input data

- z: array of shape(n_samples),

the associated membership variables

- plike: array of shape(n_samples),

the likelihood under the prior

- Returns:

- like: array of shape(n_samples),

the likelihood of the data

- test(x, tiny=1e-15)¶

Returns the log-likelihood of the mixture for x

- Parameters:

- x array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- ll: array of shape(n_samples)

the log-likelihood of the rows of x

- train(x, z=None, niter=100, delta=0.0001, ninit=1, verbose=0)¶

Same as initialize_and_estimate

- unweighted_likelihood(x)¶

return the likelihood of each data point for each component. The values are not weighted by the component weights.

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- like, array of shape(n_samples,self.k)

unweighted component-wise likelihood

Notes

Hopefully faster

- unweighted_likelihood_(x)¶

return the likelihood of each data point for each component. The values are not weighted by the component weights.

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- like, array of shape(n_samples,self.k)

unweighted component-wise likelihood

- update(x, z)¶

Update function (draw a sample of the IMM parameters)

- Parameters:

- x array of shape (n_samples,self.dim)

the data used in the estimation process

- z array of shape (n_samples), type = np.int_

the corresponding classification

- update_means(x, z)¶

Given the allocation vector z, and the corresponding data x, resample the mean

- Parameters:

- x: array of shape (nb_samples,self.dim)

the data used in the estimation process

- z: array of shape (nb_samples), type = np.int_

the corresponding classification

- update_precisions(x, z)¶

Given the allocation vector z, and the corresponding data x, resample the precisions

- Parameters:

- x array of shape (nb_samples,self.dim)

the data used in the estimation process

- z array of shape (nb_samples), type = np.int_

the corresponding classification

- update_weights(z)¶

Given the allocation vector z, resample the weights parameter

- Parameters:

- z array of shape (n_samples), type = np.int_

the allocation variable

MixedIMM¶

- class nipy.algorithms.clustering.imm.MixedIMM(alpha=0.5, dim=1)¶

Bases: IMM

Particular IMM with an additional null class. The data is supplied together with a sample-related probability of being under the null.

- __init__(alpha=0.5, dim=1)¶

- Parameters:

- alpha: float, optional,

the parameter for cluster creation

- dim: int, optional,

the dimension of the data

- Note: use the function set_priors() to set adapted priors

- average_log_like(x, tiny=1e-15)¶

returns the averaged log-likelihood of the model for the dataset x

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- tiny = 1.e-15: a small constant to avoid numerical singularities

- bayes_factor(x, z, nperm=0, verbose=0)¶

Evaluate the Bayes Factor of the current model using Chib’s method

- Parameters:

- x: array of shape (nb_samples,dim)

the data from which bic is computed

- z: array of shape (nb_samples), type = np.int_

the corresponding classification

- nperm=0: int

the number of permutations to sample in order to model the label switching issue in the computation of the Bayes Factor. By default, exhaustive permutations are used.

- verbose=0: verbosity mode

- Returns:

- bf (float) the computed evidence (Bayes factor)

Notes

See: Siddhartha Chib, "Marginal Likelihood from the Gibbs Output", Journal of the American Statistical Association, Vol. 90, 1995.

- bic(like, tiny=1e-15)¶

Computation of bic approximation of evidence

- Parameters:

- like, array of shape (n_samples, self.k)

component-wise likelihood

- tiny=1.e-15, a small constant to avoid numerical singularities

- Returns:

- the bic value, float

- check()¶

Checking the shape of different matrices involved in the model

- check_x(x)¶

essentially check that x.shape[1]==self.dim

x is returned, possibly reshaped

- conditional_posterior_proba(x, z, perm=None)¶

Compute the probability of the current parameters of self given x and z

- Parameters:

- x: array of shape (nb_samples, dim),

the data from which bic is computed

- z: array of shape (nb_samples), type = np.int_,

the corresponding classification

- perm: array of shape(nperm, self.k), type = np.int_, optional

all permutations of z under which things will be recomputed. By default, no permutation is performed.

- cross_validated_update(x, z, plike, null_class_proba, kfold=10)¶

This is a step in the sampling procedure that uses internal cross-validation

- Parameters:

- x: array of shape(n_samples, dim),

the input data

- z: array of shape(n_samples),

the associated membership variables

- plike: array of shape(n_samples),

the likelihood under the prior

- kfold: int, optional, or array

number of folds in cross-validation loop or set of indexes for the cross-validation procedure

- null_class_proba: array of shape(n_samples),

prior probability to be under the null

- Returns:

- like: array of shape(n_samples),

the (cross-validated) likelihood of the data

- z: array of shape(n_samples),

the associated membership variables

Notes

When kfold is an array, there is an internal reshuffling to randomize the order of updates

- estimate(x, niter=100, delta=0.0001, verbose=0)¶

Estimation of the model given a dataset x

- Parameters:

- x array of shape (n_samples,dim)

the data from which the model is estimated

- niter=100: maximal number of iterations in the estimation process

- delta = 1.e-4: increment of data likelihood at which

convergence is declared

- verbose=0: verbosity mode

- Returns:

- bic: an asymptotic approximation of model evidence

- evidence(x, z, nperm=0, verbose=0)¶

See bayes_factor(self, x, z, nperm=0, verbose=0)

- guess_priors(x, nocheck=0)¶

Set the priors so that they are weakly informative, following Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x, array of shape (nb_samples,self.dim)

the data used in the estimation process

- nocheck: boolean, optional,

if nocheck==True, check is skipped

- guess_regularizing(x, bcheck=1)¶

Set the regularizing priors as weakly informative, according to Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x array of shape (n_samples,dim)

the data used in the estimation process

- initialize(x)¶

initialize z using a k-means algorithm, then update the parameters

- Parameters:

- x: array of shape (nb_samples,self.dim)

the data used in the estimation process

- initialize_and_estimate(x, z=None, niter=100, delta=0.0001, ninit=1, verbose=0)¶

Estimation of self given x

- Parameters:

- x array of shape (n_samples,dim)

the data from which the model is estimated

- z = None: array of shape (n_samples)

a prior labelling of the data to initialize the computation

- niter=100: maximal number of iterations in the estimation process

- delta = 1.e-4: increment of data likelihood at which

convergence is declared

- ninit=1: number of initializations performed

to reach a good solution

- verbose=0: verbosity mode

- Returns:

- the best model is returned

- likelihood(x, plike=None)¶

return the likelihood of the model for the data x. The values are weighted by the component weights.

- Parameters:

- x: array of shape (n_samples, self.dim),

the data used in the estimation process

- plike: array of shape (n_samples), optional,

the density of each point under the prior

- Returns:

- like: array of shape (nbitem, self.k),

component-wise likelihood

- likelihood_under_the_prior(x)¶

Computes the likelihood of x under the prior

- Parameters:

- x, array of shape (self.n_samples,self.dim)

- Returns:

- w, the likelihood of x under the prior model (unweighted)

- map_label(x, like=None)¶

return the MAP labelling of x

- Parameters:

- x array of shape (n_samples,dim)

the data under study

- like=None array of shape(n_samples,self.k)

component-wise likelihood. If like==None, it is recomputed.

- Returns:

- z: array of shape(n_samples): the resulting MAP labelling

of the rows of x

- mixture_likelihood(x)¶

Returns the likelihood of the mixture for x

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- plugin(means, precisions, weights)¶

Set manually the weights, means and precision of the model

- Parameters:

- means: array of shape (self.k,self.dim)

- precisions: array of shape (self.k,self.dim,self.dim)

or (self.k, self.dim)

- weights: array of shape (self.k)

- pop(z)¶

compute the population, i.e. the statistics of allocation

- Parameters:

- z array of shape (nb_samples), type = np.int_

the allocation variable

- Returns:

- hist: array of shape (self.k), count variable

- probability_under_prior()¶

Compute the probability of the current parameters of self given the priors

- reduce(z)¶

Reduce the assignments by removing empty clusters and update self.k

- Parameters:

- z: array of shape(n),

a vector of membership variables changed in place

- Returns:

- z: the remapped values

- sample(x, null_class_proba, niter=1, sampling_points=None, init=False, kfold=None, co_clustering=False, verbose=0)¶

sample the indicator and parameters

- Parameters:

- x: array of shape (n_samples, self.dim),

the data used in the estimation process

- null_class_proba: array of shape(n_samples),

the probability to be under the null

- niter: int,

the number of iterations to perform

- sampling_points: array of shape(nbpoints, self.dim), optional

points where the likelihood will be sampled. This defaults to x.

- kfold: int, optional,

parameter of cross-validation control. By default, no cross-validation is used: the procedure is faster but less accurate.

- co_clustering: bool, optional

if True, return a model of data co-labelling across iterations

- verbose=0: verbosity mode

- Returns:

- likelihood: array of shape(nbpoints)

total likelihood of the model

- pproba: array of shape(n_samples),

the posterior of being in the null (the posterior of null_class_proba)

- coclust: only if co_clustering==True,

sparse matrix of shape (n_samples, n_samples), frequency of co-labelling of each sample pair across iterations

- sample_and_average(x, niter=1, verbose=0)¶

sample the indicator and parameters. The average values for weights, means and precisions are returned.

- Parameters:

- x = array of shape (nb_samples,dim)

the data from which bic is computed

- niter=1: number of iterations

- Returns:

- weights: array of shape (self.k)

- means: array of shape (self.k,self.dim)

- precisions: array of shape (self.k,self.dim,self.dim)

or (self.k, self.dim). These are the average parameters across samplings.

Notes

All this makes sense only if no label switching has occurred, so this is wrong in general (asymptotically).

fix: implement a permutation procedure for components identification

- sample_indicator(like, null_class_proba)¶

sample the indicator from the likelihood

- Parameters:

- like: array of shape (nbitem,self.k)

component-wise likelihood

- null_class_proba: array of shape(n_samples),

prior probability to be under the null

- Returns:

- z: array of shape(nbitem): a draw of the membership variable

Notes

Here, z=-1 encodes the null class
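When post-processing such a membership vector, the null-class samples usually need to be separated from the clustered ones before any per-cluster statistic is computed. The helper below is a hypothetical convenience on plain lists, not part of nipy.

```python
def split_null(z):
    """Return (indices in the null class, indices assigned to a cluster)."""
    null_idx = [i for i, label in enumerate(z) if label == -1]
    clustered_idx = [i for i, label in enumerate(z) if label != -1]
    return null_idx, clustered_idx

z = [0, -1, 2, -1, 0]
print(split_null(z))  # ([1, 3], [0, 2, 4])
```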

- set_constant_densities(null_dens=None, prior_dens=None)¶

Set the null and prior densities as constant (over a supposedly compact domain)

- Parameters:

- null_dens: float, optional

constant for the null density

- prior_dens: float, optional

constant for the prior density

- set_priors(x)¶

Set the priors so that they are weakly informative, following Fraley and Raftery, Journal of Classification 24:155-181 (2007).

- Parameters:

- x, array of shape (n_samples,self.dim)

the data used in the estimation process

- show(x, gd, density=None, axes=None)¶

Function to plot a GMM, still in progress. Currently, works only in 1D and 2D.

- Parameters:

- x: array of shape(n_samples, dim)

the data under study

- gd: GridDescriptor instance

- density: array of shape(prod(gd.n_bins))

density of the model on the discrete grid implied by gd. By default, this is recomputed.

- show_components(x, gd, density=None, mpaxes=None)¶

Function to plot a GMM – Currently, works only in 1D

- Parameters:

- x: array of shape(n_samples, dim)

the data under study

- gd: GridDescriptor instance

- density: array of shape(prod(gd.n_bins))

density of the model on the discrete grid implied by gd. By default, this is recomputed.

- mpaxes: axes handle to make the figure, optional,

if None, a new figure is created

- simple_update(x, z, plike, null_class_proba)¶

One step in the sampling procedure (one data sweep)

- Parameters:

- x: array of shape(n_samples, dim),

the input data

- z: array of shape(n_samples),

the associated membership variables

- plike: array of shape(n_samples),

the likelihood under the prior

- null_class_proba: array of shape(n_samples),

prior probability to be under the null

- Returns:

- like: array of shape(n_samples),

the likelihood of the data under the H1 hypothesis

- test(x, tiny=1e-15)¶

Returns the log-likelihood of the mixture for x

- Parameters:

- x array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- ll: array of shape(n_samples)

the log-likelihood of the rows of x

- train(x, z=None, niter=100, delta=0.0001, ninit=1, verbose=0)¶

Same as initialize_and_estimate

- unweighted_likelihood(x)¶

return the likelihood of each data point for each component. The values are not weighted by the component weights.

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- like, array of shape(n_samples,self.k)

unweighted component-wise likelihood

Notes

Hopefully faster

- unweighted_likelihood_(x)¶

return the likelihood of each data point for each component. The values are not weighted by the component weights.

- Parameters:

- x: array of shape (n_samples,self.dim)

the data used in the estimation process

- Returns:

- like, array of shape(n_samples,self.k)

unweighted component-wise likelihood

- update(x, z)¶

Update function (draw a sample of the IMM parameters)

- Parameters:

- x array of shape (n_samples,self.dim)

the data used in the estimation process

- z array of shape (n_samples), type = np.int_

the corresponding classification

- update_means(x, z)¶

Given the allocation vector z, and the corresponding data x, resample the mean

- Parameters:

- x: array of shape (nb_samples,self.dim)

the data used in the estimation process

- z: array of shape (nb_samples), type = np.int_

the corresponding classification

- update_precisions(x, z)¶

Given the allocation vector z, and the corresponding data x, resample the precisions

- Parameters:

- x array of shape (nb_samples,self.dim)

the data used in the estimation process

- z array of shape (nb_samples), type = np.int_

the corresponding classification

- update_weights(z)¶

Given the allocation vector z, resample the weights parameter

- Parameters:

- z array of shape (n_samples), type = np.int_

the allocation variable

Functions¶

- nipy.algorithms.clustering.imm.co_labelling(z, kmax=None, kmin=None)¶

return a sparse co-labelling matrix given the label vector z

- Parameters:

- z: array of shape(n_samples),

the input labels

- kmax: int, optional,

considers only the labels in the range [0, kmax)

- Returns:

- colabel: a sparse coo_matrix,

the co-labelling of the data, i.e. c[i, j] = 1 if z[i] == z[j], 0 otherwise
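The definition above can be sketched without SciPy by storing only the non-zero entries as a set of (i, j) index pairs, which mirrors the coordinate layout of a sparse matrix. The function name `co_labelling_pairs` is hypothetical, and the kmin argument is not modelled because its behaviour is not documented here.

```python
from collections import defaultdict

def co_labelling_pairs(z, kmax=None):
    """Set of (i, j) pairs with z[i] == z[j], optionally restricted to labels in [0, kmax)."""
    groups = defaultdict(list)
    for i, label in enumerate(z):
        if kmax is not None and not (0 <= label < kmax):
            continue  # label outside the requested range
        groups[label].append(i)
    # Every pair of samples sharing a label yields a non-zero entry.
    return {(i, j) for members in groups.values() for i in members for j in members}

print(sorted(co_labelling_pairs([0, 1, 0])))
# [(0, 0), (0, 2), (1, 1), (2, 0), (2, 2)]
```

The number of stored pairs is the sum of squared cluster sizes, so a few large clusters can make the matrix far less sparse than many small ones.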

- nipy.algorithms.clustering.imm.main()¶

Illustrative example of the behaviour of imm